Fiber-optic-modules.com Offers

FE SFP, OC3 SFP, OC12 SFP, OC48 SFP, GIGABIT SFP, PON SFP, VIDEO SFP Modules.

Including WDM, CWDM, and DWDM.

Friday, November 30, 2012

Friday, November 9, 2012

Optical modules commonly used in Ethernet switches

The common types are SFP, GBIC, XFP, and XENPAK. Their full English names are given below; the Chinese names are rarely used, so a short gloss follows each.

SFP: Small Form-factor Pluggable transceiver

GBIC: GigaBit Interface Converter, a Gigabit Ethernet interface converter

XFP: 10-Gigabit small Form-factor Pluggable transceiver, a small form-factor pluggable transceiver for 10-Gigabit Ethernet interfaces

XENPAK: 10-Gigabit EtherNet Transceiver PAcKage, a transceiver package for 10-Gigabit Ethernet interfaces

By rate: Ethernet applications use 100Base (Fast Ethernet), 1000Base (Gigabit), and 10GE; SDH applications use 155M, 622M, 2.5G, and 10G.

By package: 1 × 9, SFF, SFP, GBIC, XENPAK, and XFP, as follows.

1 × 9 package: soldered optical module, generally no faster than Gigabit, mostly with an SC interface

SFF package: soldered small-form-factor optical module, generally no faster than Gigabit, mostly with an LC interface

GBIC package: hot-swappable Gigabit interface optical module with an SC interface

SFP package: hot-swappable small-form-factor module with a maximum data rate of up to 4G, using an LC interface

XENPAK package: used in 10-Gigabit Ethernet, with an SC interface

XFP package: 10G optical module that can be used in 10-Gigabit Ethernet, SONET, and other systems, using an LC interface

By laser type: LED, VCSEL, FP LD, DFB LD

By emission wavelength: 850 nm, 1310 nm, 1550 nm, etc.

By usage: non-hot-swappable (1 × 9, SFF) and hot-swappable (GBIC, SFP, XENPAK, XFP)

Sunday, October 14, 2012

Fiber optic modules supplier

This website covers fiber optic modules. You can find the information you need there.

Website URL: http://www.fiber-optic-modules.com

Or visit it by clicking: fiber optic modules

Shenzhen Sopto Technology Co., Limited, referred to as Sopto, is a professional fiber optic communications technology company engaged in manufacturing and marketing fiber optic products. It is located in Shenzhen City, Guangdong Province, China. Sopto has large factories and one trading company. The factories mainly produce fiber optic modules, twinax cables, media converters, PCI-E cards, FTTH products, fiber optic patch cords, and other related passive products.

After years of development, we have formed an operating model built on the four core self-produced products mentioned above, supplemented by a variety of other products. We hold RoHS, CE, FC, and SGS certifications and provide fiber-optic communications agents, retailers, and telecommunications operators worldwide with high-quality, reliable fiber optic products, including fiber optic modules, media converters and chassis, PDH fiber optical multiplexers and protocol converters, GEPON and GPON solutions, splitters, CWDM and DWDM, PCI-E cards, fiber optical patch cords and cables, fiber optical adaptors and attenuators, and so on. It is worth noting that our fiber optic modules are compatible with Cisco, 3com, Juniper, and other brands of fiber optic equipment.


Monday, May 7, 2012

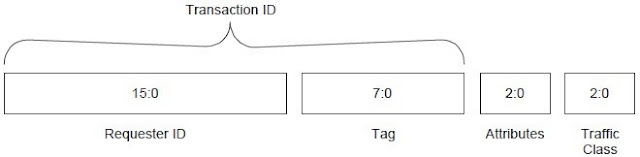

Transaction Descriptor Overview

The Transaction Descriptor is a mechanism for carrying Transaction information between the Requester and the Completer. Transaction Descriptors are composed of three fields:

• Transaction ID – identifies outstanding Transactions

• Attributes field – specifies characteristics of the Transaction

• Traffic Class (TC) field – associates Transaction with type of required service

Figure 2-12 shows the fields of the Transaction Descriptor. Note that these fields are shown together to highlight their relationship as parts of a single logical entity. The fields are not contiguous in the packet header.


Figure 2-12: Transaction Descriptor

Sunday, May 6, 2012

First/Last DW Byte Enables Rules

Byte Enables are included with Memory, I/O, and Configuration Requests. This section defines the corresponding rules. Byte Enables, when present in the Request header, are located in byte 7 of the header (see Figure 2-11). For Memory Read Requests that have the TH bit Set, the Byte Enable fields are repurposed to carry the ST[7:0] field, and values for the Byte Enables are implied as defined below. Such Requests must only be issued when it is acceptable to complete the Requests as if all bytes for requested payload were enabled.

For Memory Reads that have the TH bit Set, the following values are implied for the Byte Enables.

• If the Length field for this Request indicates a length of 1 DW, then the value for the 1st DW Byte Enables is implied to be 1111b and the value for the Last DW Byte Enables is implied to be 0000b.

• If the Length field for this Request indicates a length of greater than 1 DW, then the value for the 1st DW Byte Enables and the Last DW Byte Enables is implied to be 1111b.
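The two implied-value cases above can be written as a small lookup. This is a minimal sketch, not part of the specification; the function name `implied_byte_enables` and the tuple return shape are illustrative assumptions.

```python
def implied_byte_enables(length_dw: int) -> tuple[int, int]:
    """Return (1st DW BE, Last DW BE) implied for a Memory Read with the TH bit Set.

    Hypothetical helper: length_dw is the Request length in DW.
    """
    if length_dw < 1:
        raise ValueError("length must be at least 1 DW")
    if length_dw == 1:
        # Single-DW read: all four bytes of the only DW, no last DW.
        return 0b1111, 0b0000
    # Longer reads: every byte of the first and last DW is treated as enabled.
    return 0b1111, 0b1111
```

Because the header byte that normally holds the Byte Enables carries ST[7:0] in this case, a Completer would derive the enables from the Length field alone, as the function does.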

The 1st DW BE[3:0] field contains Byte Enables for the first (or only) DW referenced by a Request.

• If the Length field for a Request indicates a length of greater than 1 DW, this field must not equal 0000b.

The Last DW BE[3:0] field contains Byte Enables for the last DW of a Request.

• If the Length field for a Request indicates a length of 1 DW, this field must equal 0000b.

• If the Length field for a Request indicates a length of greater than 1 DW, this field must not equal 0000b.

For each bit of the Byte Enables fields:

• a value of 0b indicates that the corresponding byte of data must not be written or, if non-prefetchable, must not be read at the Completer.

• a value of 1b indicates that the corresponding byte of data must be written or read at the Completer.

Non-contiguous Byte Enables (enabled bytes separated by non-enabled bytes) are permitted in the 1st DW BE field for all Requests with length of 1 DW.

• Non-contiguous Byte Enable examples: 1010b, 0101b, 1001b, 1011b, 1101b

Non-contiguous Byte Enables are permitted in both Byte Enables fields for QW aligned Memory Requests with length of 2 DW (1 QW).

All non-QW aligned Memory Requests with length of 2 DW (1 QW) and Memory Requests with length of 3 DW or more must enable only bytes that are contiguous with the data between the first and last DW of the Request.

• Contiguous Byte Enables examples:

1st DW BE: 1100b, Last DW BE: 0011b

1st DW BE: 1000b, Last DW BE: 0111b

Table 2-9 shows the correspondence between the bits of the Byte Enables fields, their location in the Request header, and the corresponding bytes of the referenced data.

A Write Request with a length of 1 DW with no bytes enabled is permitted, and has no effect at the Completer.

If a Read Request of 1 DW specifies that no bytes are enabled to be read (1st DW BE[3:0] field = 0000b), the corresponding Completion must specify a Length of 1 DW, and include a data payload of 1 DW.

• The contents of the data payload within the Completion packet are unspecified and may be any value.

Receiver/Completer behavior is undefined for a TLP violating the Byte Enables rules specified in this section.

Receivers may optionally check for violations of the Byte Enables rules specified in this section. If a Receiver implementing such checks determines that a TLP violates one or more Byte Enables rules, the TLP is a Malformed TLP.

• If Byte Enables rules are checked, a violation is a reported error associated with the Receiving Port (see Section 6.2).
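Since the check is optional for Receivers, it may help to see the rules of this section gathered in one place. The sketch below is an illustration under assumptions: the function name, the `qw_aligned` flag, and the error strings are hypothetical, and it assumes bit 0 of each field maps to the lowest-addressed byte of its DW (Table 2-9 is not reproduced here, but this is consistent with the contiguous examples 1100b / 0011b).

```python
# Assumption: bit 0 of each field is the lowest-addressed byte of its DW.
FIRST_BE_RUNS = {0b1000, 0b1100, 0b1110, 0b1111}  # runs that reach the top byte
LAST_BE_RUNS = {0b0001, 0b0011, 0b0111, 0b1111}   # runs that start at the bottom byte


def check_byte_enables(length_dw, first_be, last_be, qw_aligned=False):
    """Return a list of violated rules; an empty list means none were found."""
    errors = []
    if length_dw == 1:
        # Any 1st DW BE pattern, including 0000b and non-contiguous ones, is allowed.
        if last_be != 0b0000:
            errors.append("Last DW BE must be 0000b when Length is 1 DW")
        return errors
    if first_be == 0b0000:
        errors.append("1st DW BE must not be 0000b when Length is greater than 1 DW")
    if last_be == 0b0000:
        errors.append("Last DW BE must not be 0000b when Length is greater than 1 DW")
    if length_dw == 2 and qw_aligned:
        # QW aligned 2 DW Memory Requests may use non-contiguous patterns.
        return errors
    # Otherwise the enabled bytes must join up with the data between the two DWs.
    if first_be and first_be not in FIRST_BE_RUNS:
        errors.append("1st DW BE is not contiguous with the following data")
    if last_be and last_be not in LAST_BE_RUNS:
        errors.append("Last DW BE is not contiguous with the preceding data")
    return errors
```

For example, `check_byte_enables(3, 0b1100, 0b0011)` returns an empty list, while `check_byte_enables(3, 0b1010, 0b0011)` reports the non-contiguous first field. A Receiver that implements such a check would treat any reported violation as a Malformed TLP.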

A Memory Read Request of 1 DW with no bytes enabled, or "zero-length Read," may be used by devices as a type of flush Request. The flush semantic has wide application, and all Completers must implement the functionality associated with this semantic. Because a Requester may use the flush semantic without comprehending the characteristics of the Completer, Completers must ensure that zero-length reads do not have side-effects. This is really just a specific case of the rule that in a non-prefetchable space, non-enabled bytes must not be read at the Completer. Note that the flush applies only to traffic in the same Traffic Class as the zero-length Read.


Friday, May 4, 2012

Routing and Addressing Rules

There are three principal mechanisms for TLP routing: address, ID, and implicit. This section defines the rules for the address and ID routing mechanisms. Implicit routing is used only with Message Requests, and is covered in Section 2.2.8.


Address Based Routing Rules

Address routing is used with Memory and I/O Requests.

Two address formats are specified, a 64-bit format used with a 4 DW header (see Figure 2-7) and a 32-bit format used with a 3 DW header (see Figure 2-8).

Figure 2-8: 32-bit Address Routing

For Memory Read, Memory Write, and AtomicOp Requests, the Address Type (AT) field is encoded as shown in Table 2-5, with full descriptions contained in the Address Translation Services Specification, Revision 1.0. For all other Requests, the AT field is reserved.

Table 2-5: Address Type (AT) Field Encodings

Address mapping to the TLP header is shown in Table 2-6.

Table 2-6: Address Field Mapping

Memory Read, Memory Write, and AtomicOp Requests can use either format.

• For Addresses below 4 GB, Requesters must use the 32-bit format. The behavior of the receiver is not specified if a 64-bit format request addressing below 4 GB (i.e., with the upper 32 bits of address all 0) is received.

I/O Read Requests and I/O Write Requests use the 32-bit format.

All agents must decode all address bits in the header - address aliasing is not allowed.
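The format rules above amount to a simple decision on the target address. The following sketch is illustrative only; the function name, the `is_io` flag, and the `(address_bits, header_dw)` return tuple are assumptions made for the example.

```python
FOUR_GB = 1 << 32


def address_format(address: int, is_io: bool = False) -> tuple[int, int]:
    """Return (address_bits, header_dw) a Requester would use for this address."""
    if not 0 <= address < (1 << 64):
        raise ValueError("address must fit in 64 bits")
    if is_io:
        # I/O Requests always use the 32-bit format.
        if address >= FOUR_GB:
            raise ValueError("I/O Requests cannot address 4 GB or above")
        return 32, 3
    if address < FOUR_GB:
        # Below 4 GB the 32-bit format is mandatory, even for Memory Requests.
        return 32, 3
    return 64, 4
```

Making the below-4 GB case return the 32-bit format unconditionally avoids ever emitting a 64-bit format request with the upper 32 address bits all 0, for which receiver behavior is not specified.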


ID Based Routing Rules

ID routing is used with Configuration Requests, with ID Routed Messages, and with Completions. This specification defines Vendor_Defined Messages that are ID Routed (Section 2.2.8.6). Other specifications define additional ID Routed Messages.

ID routing uses the Bus, Device, and Function Numbers (as applicable) to specify the destination for the TLP:

• For non-ARI Routing IDs, Bus, Device, and (3-bit) Function Number to TLP header mapping is shown in Table 2-7.

• For ARI Routing IDs, the Bus and (8-bit) Function Number to TLP header mapping is shown in Table 2-8.
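To make the two Routing ID forms concrete, the sketch below packs each into a 16-bit value. Tables 2-7 and 2-8 are not reproduced in this post, so the bit layout is an assumption based on the usual convention: an 8-bit Bus Number in the upper byte, followed by a 5-bit Device Number and 3-bit Function Number (non-ARI) or an 8-bit Function Number (ARI). The function names are hypothetical.

```python
def non_ari_routing_id(bus: int, device: int, function: int) -> int:
    """Pack Bus[7:0], Device[4:0], Function[2:0] into a 16-bit Routing ID."""
    if not (0 <= bus < 256 and 0 <= device < 32 and 0 <= function < 8):
        raise ValueError("bus, device, or function out of range")
    return (bus << 8) | (device << 3) | function


def ari_routing_id(bus: int, function: int) -> int:
    """Pack Bus[7:0] and an 8-bit Function Number into a 16-bit Routing ID."""
    if not (0 <= bus < 256 and 0 <= function < 256):
        raise ValueError("bus or function out of range")
    return (bus << 8) | function
```

Under this layout the ARI form reuses the five Device Number bits as extra Function Number bits, which is why both forms occupy the same 16 bits of the header.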


Two ID routing formats are specified, one used with a 4 DW header (see Figure 2-9) and one used with a 3 DW header (see Figure 2-10).

• Header field locations are the same for both formats, and are given in Table 2-7


Table 2-7: Header Field Locations for non-ARI ID Routing

Table 2-8: Header Field Locations for ARI ID Routing

Figure 2-9: ID Routing with 4 DW Header

Figure 2-10: ID Routing with 3 DW Header

Thursday, May 3, 2012

TLP Digest Rules

For any TLP, a value of 1b in the TD field indicates the presence of the TLP Digest field including an ECRC value at the end of the TLP.

• A TLP where the TD field value does not correspond with the observed size (accounting for the data payload, if present) is a Malformed TLP.

♦ This is a reported error associated with the Receiving Port (see Section 6.2).

If an intermediate or ultimate PCI Express Receiver of the TLP does not support ECRC checking, the Receiver must ignore the TLP Digest.

• If the Receiver of the TLP supports ECRC checking, the Receiver interprets the value in the TLP Digest field as an ECRC value, according to the rules in Section 2.7.1.
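The size check implied by the TD rule can be sketched as follows. This is an illustration under assumptions: sizes are counted in DW, the TLP Digest is taken to be one DW (a 32-bit ECRC), and the function and parameter names are hypothetical.

```python
def td_matches_size(header_dw: int, payload_dw: int, td: bool, observed_dw: int) -> bool:
    """Return True if the observed TLP size agrees with the TD field.

    header_dw   -- 3 or 4, from the header format
    payload_dw  -- data payload length in DW (0 if the TLP carries no data)
    td          -- value of the TD field
    observed_dw -- number of DW actually received for this TLP
    """
    if header_dw not in (3, 4):
        raise ValueError("header must be 3 DW or 4 DW")
    # Assumption: the TLP Digest adds exactly one DW when TD is 1b.
    expected_dw = header_dw + payload_dw + (1 if td else 0)
    return observed_dw == expected_dw
```

A result of `False` corresponds to the Malformed TLP case described above. Note that this check is independent of whether the Receiver supports ECRC checking; a Receiver without ECRC support still accounts for the extra DW and then ignores its contents.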


Sunday, April 22, 2012

TLPs with Data Payloads - Rules

Length is specified as an integral number of DW

Length[9:0] is reserved for all Messages except those which explicitly refer to a Data Length

• Refer to the Message Code tables in Section 2.2.8.

The Transmitter of a TLP with a data payload must not allow the data payload length as given by the TLP’s Length [ ] field to exceed the length specified by the value in the Max_Payload_Size field of the Transmitter’s Device Control register taken as an integral number of DW (see Section 7.8.4).

• For ARI Devices, the Max_Payload_Size is determined solely by the setting in Function 0. The Max_Payload_Size settings in other Functions are ignored.

• For an Upstream Port associated with a non-ARI multi-Function device whose Max_Payload_Size settings are identical across all Functions, a transmitted TLP’s data payload must not exceed the common Max_Payload_Size setting.

• For an Upstream Port associated with a non-ARI multi-Function device whose Max_Payload_Size settings are not identical across all Functions, a transmitted TLP’s data payload must not exceed a Max_Payload_Size setting whose determination is implementation specific.

♦ Transmitter implementations are encouraged to use the Max_Payload_Size setting from the Function that generated the transaction, or else the smallest Max_Payload_Size setting across all Functions.

♦ Software should not set the Max_Payload_Size in different Functions to different values unless software is aware of the specific implementation.

• Note: Max_Payload_Size applies only to TLPs with data payloads; Memory Read Requests are not restricted in length by Max_Payload_Size. The size of the Memory Read Request is controlled by the Length field.
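The Transmitter-side limit can be checked with a few lines of arithmetic. The sketch below assumes the usual Device Control register encoding, in which a Max_Payload_Size field value of 000b means 128 bytes and each increment doubles the limit up to 101b (4096 bytes); that encoding is defined in Section 7.8.4 rather than in this post, so verify it there. The function names are hypothetical.

```python
def max_payload_bytes(mps_field: int) -> int:
    """Decode a Max_Payload_Size field value (assumed encoding: 128 << value)."""
    if not 0 <= mps_field <= 0b101:
        raise ValueError("reserved Max_Payload_Size encoding")
    return 128 << mps_field


def payload_within_limit(length_dw: int, mps_field: int) -> bool:
    """Return True if a data payload of length_dw DW fits within Max_Payload_Size."""
    return length_dw * 4 <= max_payload_bytes(mps_field)


def transmitter_mps_field(function_fields: list[int]) -> int:
    """One conservative choice for a non-ARI multi-Function device: the smallest setting."""
    return min(function_fields)
```

`transmitter_mps_field` reflects only one of the encouraged options (the smallest setting across all Functions); using the setting of the Function that generated the transaction is equally acceptable under the text above.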

The size of the data payload of a Received TLP as given by the TLP’s Length [ ] field must not exceed the length specified by the value in the Max_Payload_Size field of the Receiver’s Device Control register taken as an integral number of DW (see Section 7.8.4).

• Receivers must check for violations of this rule. If a Receiver determines that a TLP violates this rule, the TLP is a Malformed TLP.

♦ This is a reported error associated with the Receiving Port (see Section 6.2).

• For ARI Devices, the Max_Payload_Size is determined solely by the setting in Function 0. The Max_Payload_Size settings in other Functions are ignored.

• For an Upstream Port associated with a non-ARI multi-Function device whose Max_Payload_Size settings are identical across all Functions, the Receiver is required to check the TLP’s data payload size against the common Max_Payload_Size setting.

• For an Upstream Port associated with a non-ARI multi-Function device whose Max_Payload_Size settings are not identical across all Functions, the Receiver is required to check the TLP’s data payload against a Max_Payload_Size setting whose determination is implementation specific.

♦ Receiver implementations are encouraged to use the Max_Payload_Size setting from the Function targeted by the transaction, or else the largest Max_Payload_Size setting across all Functions.

♦ Software should not set the Max_Payload_Size in different Functions to different values unless software is aware of the specific implementation.

For TLPs that include data, the value in the Length field and the actual amount of data included in the TLP must match.

• Receivers must check for violations of this rule. If a Receiver determines that a TLP violates this rule, the TLP is a Malformed TLP.

♦ This is a reported error associated with the Receiving Port (see Section 6.2).

The value in the Length field applies only to data; the TLP Digest is not included in the Length.

When a data payload is included in a TLP other than an AtomicOp Request or an AtomicOp Completion, the first byte of data following the header corresponds to the byte address closest to zero and the succeeding bytes are in increasing byte address sequence.

• Example: For a 16-byte write to location 100h, the first byte following the header would be the byte to be written to location 100h, and the second byte would be written to location 101h, and so on, with the final byte written to location 10Fh.
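The 16-byte example can be reproduced directly. This is a minimal sketch; `payload_byte_addresses` is a hypothetical helper that pairs each payload byte with the address it is written to.

```python
def payload_byte_addresses(start_address: int, payload: bytes) -> list[tuple[int, int]]:
    """Pair each payload byte with its target address, lowest address first."""
    return [(start_address + offset, value) for offset, value in enumerate(payload)]


# A 16-byte write to location 100h, using the byte values 0..15 as sample data.
mapping = payload_byte_addresses(0x100, bytes(range(16)))
first_address, last_address = mapping[0][0], mapping[-1][0]
```

Here `first_address` is 100h and `last_address` is 10Fh, matching the example in the text.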

The data payload in AtomicOp Requests and AtomicOp Completions must be formatted such that the first byte of data following the TLP header is the least significant byte of the first data value, and subsequent bytes of data are strictly increasing in significance. With CAS Requests, the second data value immediately follows the first data value, and must be in the same format.

• The endian format used by AtomicOp Completers to read and write data at the target location is implementation specific, and is permitted to be whatever the Completer determines is appropriate for the target memory (e.g., little endian, big endian, etc). Endian format capability reporting and controls for AtomicOp Completers are outside the scope of this specification.

• Little endian example: For a 64-bit (8-byte) Swap Request targeting location 100h with the target memory in little endian format, the first byte following the header is written to location 100h, the second byte is written to location 101h, and so on, with the final byte written to location 107h. Note that before performing the writes, the Completer first reads the target memory locations so it can return the original value in the Completion. The byte address correspondence to the data in the Completion is identical to that in the Request.

• Big endian example: For a 64-bit (8-byte) Swap Request targeting location 100h with the target memory in big endian format, the first byte following the header is written to location 107h, the second byte is written to location 106h, and so on, with the final byte written to location 100h. Note that before performing the writes, the Completer first reads the target memory locations so it can return the original value in the Completion. The byte address correspondence to the data in the Completion is identical to that in the Request.

• Figure 2-6 shows little endian and big endian examples of Completer target memory access for a 64-bit (8-byte) FetchAdd. The bytes in the operands and results are numbered 0-7, with byte 0 being least significant and byte 7 being most significant. In each case, the Completer fetches the target memory operand using the appropriate endian format. Next, AtomicOp compute logic in the Completer performs the FetchAdd operation using the original target memory value and the “add” value from the FetchAdd Request. Finally, the Completer stores the FetchAdd result back to target memory using the same endian format used for the fetch.

[Figure 2-6 has two panels, "FetchAdd example with target memory in little endian format" and "FetchAdd example with target memory in big endian format". Each panel shows target memory locations 100h to 107h, the original value, the "add" value, and the FetchAdd result (bytes numbered 7 to 0), connected through the AtomicOp compute logic.]

Figure 2-6: Examples of Completer Target Memory Access for FetchAdd
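The FetchAdd flow described for Figure 2-6 can be modeled in a few lines. This is a simplified sketch, not a Completer implementation: target memory is a `bytearray` whose index 0 stands in for location 100h, the function name `fetch_add` is hypothetical, and the add is assumed to wrap on overflow.

```python
def fetch_add(memory: bytearray, offset: int, add_payload: bytes, byteorder: str) -> bytes:
    """Model a FetchAdd; returns the Completion payload (original value).

    byteorder is the endian format of the target memory: "little" or "big".
    """
    size = len(add_payload)
    # 1. Fetch the operand from target memory using the memory's endian format.
    original = int.from_bytes(memory[offset:offset + size], byteorder)
    # 2. The Request payload is always least significant byte first.
    add_value = int.from_bytes(add_payload, "little")
    # Assumption: the sum wraps at the operand width (carry out is discarded).
    result = (original + add_value) % (1 << (8 * size))
    # 3. Store the result using the same endian format used for the fetch.
    memory[offset:offset + size] = result.to_bytes(size, byteorder)
    # The Completion payload is also least significant byte first.
    return original.to_bytes(size, "little")


big_mem = bytearray((0x0102030405060708).to_bytes(8, "big"))
completion = fetch_add(big_mem, 0, (1).to_bytes(8, "little"), "big")
```

The point of the model is that only steps 1 and 3 depend on the target memory's endian format; the payload on the wire has the same byte order in both panels of the figure.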

IMPLEMENTATION NOTE

Endian Format Support by RC AtomicOp Completers

One key reason for permitting an AtomicOp Completer to access target memory using an endian format of its choice is so that PCI Express devices targeting host memory with AtomicOps can interoperate with host software that uses atomic operation instructions (or instruction sequences). Some host environments have limited endian format support with atomic operations, and by supporting the “right” endian format(s), an RC AtomicOp Completer may significantly improve interoperability.

For an RC with AtomicOp Completer capability on a platform supporting little-endian-only processors, there is little envisioned benefit for the RC AtomicOp Completer to support any endian format other than little endian. For an RC with AtomicOp Completer capability on a platform supporting bi-endian processors, there may be benefit in supporting both big endian and little endian formats, and perhaps having the endian format configurable for different regions of host memory.

There is no PCI Express requirement that an RC AtomicOp Completer support the host processor’s “native” format (if there is one), nor is there necessarily significant benefit to doing so. For example, some processors can use load-link/store-conditional or similar instruction sequences to do atomic operations in non-native endian formats and thus not need the RC AtomicOp Completer to support alternative endian formats.

IMPLEMENTATION NOTE

Maintaining Alignment in Data Payloads

Section 2.3.1.1 discusses rules for forming Read Completions respecting certain natural address boundaries. Memory Write performance can be significantly improved by respecting similar address boundaries in the formation of the Write Request. Specifically, forming Write Requests such that natural address boundaries of 64 or 128 bytes are respected will help to improve system performance.
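One way to respect such boundaries is to split a large write so that no single Write Request crosses a 64-byte or 128-byte line. The sketch below is illustrative only: `split_write` is a hypothetical helper that works in bytes and ignores Max_Payload_Size and DW granularity, both of which a real Requester would also have to honor.

```python
def split_write(address: int, length: int, boundary: int = 64) -> list[tuple[int, int]]:
    """Split a write into (address, length) chunks that never cross a boundary."""
    if boundary <= 0 or boundary & (boundary - 1):
        raise ValueError("boundary must be a power of two")
    chunks = []
    end = address + length
    while address < end:
        # The next natural boundary strictly above the current address.
        next_boundary = (address // boundary + 1) * boundary
        chunk_end = min(end, next_boundary)
        chunks.append((address, chunk_end - address))
        address = chunk_end
    return chunks
```

For a 96-byte write starting at 130h with a 64-byte boundary, this yields a 16-byte request up to 140h, a full 64-byte request, and a final 16-byte request starting at 180h.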

Length[9:0] is reserved for all Messages except those which explicitly refer to a Data Length

• Refer to the Message Code tables in Section 2.2.8.

The Transmitter of a TLP with a data payload must not allow the data payload length as given

by the TLP’s Length [ ] field to exceed the length specified by the value in the

Max_Payload_Size field of the Transmitter’s Device Control register taken as an integral number

of DW (see Section 7.8.4).

• For ARI Devices, the Max_Payload_Size is determined solely by the setting in Function 0.

The Max_Payload_Size settings in other Functions are ignored.

• For an Upstream Port associated with a non-ARI multi-Function device whose

Max_Payload_Size settings are identical across all Functions, a transmitted TLP’s data

payload must not exceed the common Max_Payload_Size setting.

• For an Upstream Port associated with a non-ARI multi-Function device whose

Max_Payload_Size settings are not identical across all Functions, a transmitted TLP’s data

payload must not exceed a Max_Payload_Size setting whose determination is

implementation specific.

♦ Transmitter implementations are encouraged to use the Max_Payload_Size setting from

the Function that generated the transaction, or else the smallest Max_Payload_Size

setting across all Functions.

♦ Software should not set the Max_Payload_Size in different Functions to different values

unless software is aware of the specific implementation.

• Note: Max_Payload_Size applies only to TLPs with data payloads; Memory Read Requests

are not restricted in length by Max_Payload_Size. The size of the Memory Read Request is

controlled by the Length field

The size of the data payload of a Received TLP as given by the TLP’s Length [ ] field must not

exceed the length specified by the value in the Max_Payload_Size field of the Receiver’s Device

Control register taken as an integral number of DW (see Section 7.8.4).

• Receivers must check for violations of this rule. If a Receiver determines that a TLP violates

this rule, the TLP is a Malformed TLP

♦ This is a reported error associated with the Receiving Port (see Section 6.2)

• For ARI Devices, the Max_Payload_Size is determined solely by the setting in Function 0.20 The Max_Payload_Size settings in other Functions are ignored.

• For an Upstream Port associated with a non-ARI multi-Function device whose

Max_Payload_Size settings are identical across all Functions, the Receiver is required to

check the TLP’s data payload size against the common Max_Payload_Size setting.

• For an Upstream Port associated with a non-ARI multi-Function device whose

Max_Payload_Size settings are not identical across all Functions, the Receiver is required to

check the TLP’s data payload against a Max_Payload_Size setting whose determination is

implementation specific.

♦ Receiver implementations are encouraged to use the Max_Payload_Size setting from the

Function targeted by the transaction, or else the largest Max_Payload_Size setting across all Functions.

♦ Software should not set the Max_Payload_Size in different Functions to different values

unless software is aware of the specific implementation.

For TLPs, that include data, the value in the Length field and the actual amount of data included

in the TLP must match.

• Receivers must check for violations of this rule. If a Receiver determines that a TLP violates

this rule, the TLP is a Malformed TLP

♦ This is a reported error associated with the Receiving Port (see Section 6.2)

The value in the Length field applies only to data – the TLP Digest is not included in the Length

When a data payload is included in a TLP other than an AtomicOp Request or an AtomicOp

Completion, the first byte of data following the header corresponds to the byte address closest

to zero and the succeeding bytes are in increasing byte address sequence.

• Example: For a 16-byte write to location 100h, the first byte following the header would be

the byte to be written to location 100h, and the second byte would be written to location

101h, and so on, with the final byte written to location 10Fh.

The data payload in AtomicOp Requests and AtomicOp Completions must be formatted such

that the first byte of data following the TLP header is the least significant byte of the first data

value, and subsequent bytes of data are strictly increasing in significance. With CAS Requests,

the second data value immediately follows the first data value, and must be in the same format.

• The endian format used by AtomicOp Completers to read and write data at the target

location is implementation specific, and is permitted to be whatever the Completer

determines is appropriate for the target memory (e.g., little endian, big endian, etc). Endian

format capability reporting and controls for AtomicOp Completers are outside the scope of

this specification.

• Little endian example: For a 64-bit (8-byte) Swap Request targeting location 100h with the

target memory in little endian format, the first byte following the header is written to

location 100h, the second byte is written to location 101h, and so on, with the final byte

written to location 107h. Note that before performing the writes, the Completer first reads

the target memory locations so it can return the original value in the Completion. The byte

address correspondence to the data in the Completion is identical to that in the Request.

• Big endian example: For a 64-bit (8-byte) Swap Request targeting location 100h with the

target memory in big endian format, the first byte following the header is written to location

107h, the second byte is written to location 106h, and so on, with the final byte written to

location 100h. Note that before performing the writes, the Completer first reads the target

memory locations so it can return the original value in the Completion. The byte address

correspondence to the data in the Completion is identical to that in the Request.

• Figure 2-6 shows little endian and big endian examples of Completer target memory access

for a 64-bit (8-byte) FetchAdd. The bytes in the operands and results are numbered 0-7,

with byte 0 being least significant and byte 7 being most significant. In each case, the

Completer fetches the target memory operand using the appropriate endian format. Next,

AtomicOp compute logic in the Completer performs the FetchAdd operation using the

original target memory value and the “add” value from the FetchAdd Request. Finally, the

Completer stores the FetchAdd result back to target memory using the same endian format

used for the fetch.

[Figure 2-6 has two panels: "FetchAdd example with target memory in little endian format" and "FetchAdd example with target memory in big endian format". Each panel shows the original value, the "add" value, and the FetchAdd result (bytes 7 down to 0) around the AtomicOp compute logic, together with target memory locations 100h through 107h.]

Figure 2-6: Examples of Completer Target Memory Access for FetchAdd
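The Swap and FetchAdd examples above can be sketched in a few lines of Python. This is only an illustrative model, not anything defined by the specification: the function names, the use of a bytearray as "target memory", and the wrap-around on overflow are assumptions made for the sketch. The payload is always treated as least significant byte first, while the Completer reads and writes target memory in whichever endian format it has chosen.

```python
def _read_operand(memory, addr, size, endian):
    """Read `size` bytes at `addr` using the Completer's endian format."""
    return int.from_bytes(memory[addr:addr + size], endian)


def _write_operand(memory, addr, size, value, endian):
    """Store `value` at `addr` as `size` bytes in the Completer's endian format."""
    memory[addr:addr + size] = value.to_bytes(size, endian)


def complete_swap(memory, addr, payload, endian):
    """Model a Swap: write the payload value, return the original value.

    `payload` is the Request data, least significant byte first.
    The returned bytes use the same byte order as the Request payload.
    """
    size = len(payload)
    new_value = int.from_bytes(payload, "little")
    original = _read_operand(memory, addr, size, endian)  # read before write
    _write_operand(memory, addr, size, new_value, endian)
    return original.to_bytes(size, "little")


def complete_fetch_add(memory, addr, payload, endian):
    """Model a FetchAdd: add the payload value to target memory.

    Assumption for this sketch: the sum wraps around at the operand width.
    """
    size = len(payload)
    add_value = int.from_bytes(payload, "little")
    original = _read_operand(memory, addr, size, endian)
    result = (original + add_value) % (1 << (8 * size))
    _write_operand(memory, addr, size, result, endian)  # same format as the fetch
    return original.to_bytes(size, "little")


# Hypothetical usage: an 8-byte Swap targeting location 100h.
little_mem = bytearray(0x200)
big_mem = bytearray(0x200)
swap_payload = bytes([0x01, 0x02, 0x03, 0x04, 0x05, 0x06, 0x07, 0x08])
complete_swap(little_mem, 0x100, swap_payload, "little")
complete_swap(big_mem, 0x100, swap_payload, "big")
```

With little endian target memory the first payload byte ends up at 100h; with big endian target memory it ends up at 107h, matching the two examples in the text.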

IMPLEMENTATION NOTE

Endian Format Support by RC AtomicOp Completers

One key reason for permitting an AtomicOp Completer to access target memory using an endian

format of its choice is so that PCI Express devices targeting host memory with AtomicOps can

interoperate with host software that uses atomic operation instructions (or instruction sequences).

Some host environments have limited endian format support with atomic operations, and by

supporting the “right” endian format(s), an RC AtomicOp Completer may significantly improve

interoperability.

For an RC with AtomicOp Completer capability on a platform supporting little-endian-only

processors, there is little envisioned benefit for the RC AtomicOp Completer to support any endian

format other than little endian. For an RC with AtomicOp Completer capability on a platform

supporting bi-endian processors, there may be benefit in supporting both big endian and little

endian formats, and perhaps having the endian format configurable for different regions of host

memory.

There is no PCI Express requirement that an RC AtomicOp Completer support the host processor’s

“native” format (if there is one), nor is there necessarily significant benefit to doing so. For

example, some processors can use load-link/store-conditional or similar instruction sequences to do

atomic operations in non-native endian formats and thus not need the RC AtomicOp Completer to

support alternative endian formats.

IMPLEMENTATION NOTE

Maintaining Alignment in Data Payloads

Section 2.3.1.1 discusses rules for forming Read Completions respecting certain natural address

boundaries. Memory Write performance can be significantly improved by respecting similar address

boundaries in the formation of the Write Request. Specifically, forming Write Requests such that

natural address boundaries of 64 or 128 bytes are respected will help to improve system

performance.
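As a rough illustration of the alignment advice above, the following Python sketch splits one large write into pieces that never cross a natural address boundary. The function name and the tuple format are hypothetical, and the sketch ignores other limits (such as the maximum payload size) that a real implementation would also have to respect.

```python
def split_write(address, length, boundary=64):
    """Split a write of `length` bytes starting at `address` into
    (start_address, byte_count) chunks that do not cross `boundary`.

    `boundary` is assumed to be a power of two, e.g. 64 or 128.
    """
    if boundary <= 0 or boundary & (boundary - 1):
        raise ValueError("boundary must be a positive power of two")
    chunks = []
    end = address + length
    while address < end:
        # First boundary-aligned address strictly above the current one.
        next_boundary = (address // boundary + 1) * boundary
        chunk_end = min(end, next_boundary)
        chunks.append((address, chunk_end - address))
        address = chunk_end
    return chunks


# Hypothetical usage: 100 bytes starting 16 bytes before a 64-byte boundary.
example_chunks = split_write(0x1030, 100, boundary=64)
# -> [(0x1030, 16), (0x1040, 64), (0x1080, 20)]
```

Only the first and last chunks are shorter than the boundary size; every chunk in between starts and ends on a 64-byte boundary.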

Friday, April 20, 2012

Common Packet Header Fields

All Transaction Layer Packet (TLP) prefixes and headers contain the following fields (see

Figure 2-4):

Fmt[2:0] – Format of TLP (see Table 2-2) – bits 7:5 of byte 0

Type[4:0] – Type of TLP – bits 4:0 of byte 0

[Figure 2-4 layout: byte 0 (+0) carries Fmt in bits 7:5 and Type in bits 4:0; the fields in bytes 1 through 3 (+1 to +3) depend on the Fmt and Type fields.]

Figure 2-4: Fields Present in All TLPs

The Fmt field(s) indicate the presence of one or more TLP Prefixes and the Type field(s) indicate

the associated TLP Prefix type(s).

The Fmt and Type fields of the TLP Header provide the information required to determine the size

of the remaining part of the TLP Header, and if the packet contains a data payload following the

header.

The Fmt, Type, TD, and Length fields of the TLP Header contain all information necessary to

determine the overall size of the non-prefix portion of the TLP. The Type field, in addition to

defining the type of the TLP, also determines how the TLP is routed by a Switch. Different types of

TLPs are discussed in more detail in the following sections.

Permitted Fmt[2:0] and Type[4:0] field values are shown in Table 2-3.

• All other encodings are reserved (see Section 2.3).

TC[2:0] – Traffic Class (see Section 2.4.2) – bits [6:4] of byte 1

TH – 1b indicates the presence of TLP Processing Hints (TPH) in the TLP header and optional

TPH TLP Prefix (if present) – bit 0 of byte 1 (see Section 2.2.7.1)

Attr[1:0] – Attributes (see Section 2.2.6.3) – bits [5:4] of byte 2

Attr[2] – Attribute (see Section 2.2.6.3) – bit 2 of byte 1

TD – 1b indicates presence of TLP digest in the form of a single DW at the end of the TLP (see Section 2.2.3) – bit 7 of byte 2

EP – indicates the TLP is poisoned (see Section 2.7) – bit 6 of byte 2

Length[9:0] – Length of data payload in DW (see Table 2-4) – bits 1:0 of byte 2 concatenated

with bits 7:0 of byte 3

• TLP data must be 4-byte naturally aligned and in increments of 4-byte Double Words (DW).

• Reserved for TLPs that do not contain or refer to data payloads, including Cpl, CplLk, and

Messages (except as specified)

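[Figure 2-5 layout, header bytes +0 to +3: byte 0 = Fmt (bits 7:5), Type (bits 4:0); byte 1 = R (bit 7), TC (bits 6:4), R (bit 3), Attr (bit 2), R (bit 1), TH (bit 0); byte 2 = TD (bit 7), EP (bit 6), Attr (bits 5:4), AT (bits 3:2), Length (bits 1:0); byte 3 = Length (bits 7:0).]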

Figure 2-5: Fields Present in All TLP Headers
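The bit positions listed above can be checked with a small decoder for the first four header bytes. This is a minimal sketch, assuming the header is available as a Python bytes object in transmission order; the function name and the dictionary keys are hypothetical and not part of the specification.

```python
def parse_tlp_header_dw0(header):
    """Extract the common fields from the first 4 bytes of a TLP header."""
    if len(header) < 4:
        raise ValueError("need at least 4 header bytes")
    b0, b1, b2, b3 = header[:4]
    return {
        "fmt": (b0 >> 5) & 0x7,            # bits 7:5 of byte 0
        "type": b0 & 0x1F,                 # bits 4:0 of byte 0
        "tc": (b1 >> 4) & 0x7,             # bits 6:4 of byte 1
        # Attr[2] is bit 2 of byte 1; Attr[1:0] are bits 5:4 of byte 2.
        "attr": (((b1 >> 2) & 0x1) << 2) | ((b2 >> 4) & 0x3),
        "th": b1 & 0x1,                    # bit 0 of byte 1
        "td": (b2 >> 7) & 0x1,             # bit 7 of byte 2
        "ep": (b2 >> 6) & 0x1,             # bit 6 of byte 2
        # Raw 10-bit Length field: bits 1:0 of byte 2, then byte 3.
        "length": ((b2 & 0x3) << 8) | b3,
    }


# Hypothetical usage with a hand-built header: Fmt=010b, Type=0 0000b,
# TC=3, Attr=110b, TH=1, TD=1, EP=0, Length=0x102.
sample_fields = parse_tlp_header_dw0(bytes([0x40, 0x35, 0xA1, 0x02]))
```

The Length value is returned as the raw field; interpreting it as a payload size still requires the encoding in Table 2-4.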

Table 2-2: Fmt[2:0] Field Values

Fmt[2:0]    Corresponding TLP Format
000b        3 DW header, no data
001b        4 DW header, no data
010b        3 DW header, with data
011b        4 DW header, with data
100b        TLP Prefix

All encodings not shown above are reserved (see Section 2.3).

Table 2-3: Fmt[2:0] and Type[4:0] Field Encodings

TLP Type    Fmt[2:0] (b)    Type[4:0] (b)    Description
            (see note 2)
MRd         000, 001        0 0000           Memory Read Request
MRdLk       000, 001        0 0001           Memory Read Request-Locked
MWr         010, 011        0 0000           Memory Write Request
IORd        000             0 0010           I/O Read Request
IOWr        010             0 0010           I/O Write Request
CfgRd0      000             0 0100           Configuration Read Type 0
CfgWr0      010             0 0100           Configuration Write Type 0
CfgRd1      000             0 0101           Configuration Read Type 1
CfgWr1      010             0 0101           Configuration Write Type 1
TCfgRd      000             1 1011           Deprecated TLP Type (see note 3)
TCfgWr      010             1 1011           Deprecated TLP Type (see note 3)
Msg         001             1 0r2r1r0        Message Request – The sub-field r[2:0] specifies the Message routing mechanism (see Table 2-18).
MsgD        011             1 0r2r1r0        Message Request with data payload – The sub-field r[2:0] specifies the Message routing mechanism (see Table 2-18).
Cpl         000             0 1010           Completion without Data – Used for I/O and Configuration Write Completions with any Completion Status. Also used for AtomicOp Completions and Read Completions (I/O, Configuration, or Memory) with Completion Status other than Successful Completion.
CplD        010             0 1010           Completion with Data – Used for Memory, I/O, and Configuration Read Completions. Also used for AtomicOp Completions.
CplLk       000             0 1011           Completion for Locked Memory Read without Data – Used only in error case.
CplDLk      010             0 1011           Completion for Locked Memory Read – otherwise like CplD.
FetchAdd    010, 011        0 1100           Fetch and Add AtomicOp Request
Swap        010, 011        0 1101           Unconditional Swap AtomicOp Request
CAS         010, 011        0 1110           Compare and Swap AtomicOp Request
LPrfx       100             0L3L2L1L0        Local TLP Prefix – The sub-field L[3:0] specifies the Local TLP Prefix type (see Table 2-29).
EPrfx       100             1E3E2E1E0        End-End TLP Prefix – The sub-field E[3:0] specifies the End-End TLP Prefix type (see Table 2-30).

Note 2: Requests with two Fmt[2:0] values shown can use either 32-bit (the first value) or 64-bit (the second value) addressing packet formats.

Note 3: Deprecated TLP Types: previously used for TCS, which is no longer supported by this specification. If a Receiver does not implement TCS, the Receiver must treat such Requests as Malformed Packets.

All encodings not shown above are reserved (see Section 2.3).
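The encodings in Table 2-3 lend themselves to a simple lookup. The sketch below is one possible way to map a Fmt/Type pair to the TLP type mnemonic; the function and table names are hypothetical, and returning None for reserved encodings is a choice made for this example, not a behavior defined by the specification.

```python
# Fixed (Fmt, Type) encodings from Table 2-3; Msg/MsgD and the
# prefixes carry sub-fields and are handled separately below.
_FIXED_ENCODINGS = {
    (0b000, 0b00000): "MRd",      (0b001, 0b00000): "MRd",
    (0b000, 0b00001): "MRdLk",    (0b001, 0b00001): "MRdLk",
    (0b010, 0b00000): "MWr",      (0b011, 0b00000): "MWr",
    (0b000, 0b00010): "IORd",     (0b010, 0b00010): "IOWr",
    (0b000, 0b00100): "CfgRd0",   (0b010, 0b00100): "CfgWr0",
    (0b000, 0b00101): "CfgRd1",   (0b010, 0b00101): "CfgWr1",
    (0b000, 0b11011): "TCfgRd",   (0b010, 0b11011): "TCfgWr",
    (0b000, 0b01010): "Cpl",      (0b010, 0b01010): "CplD",
    (0b000, 0b01011): "CplLk",    (0b010, 0b01011): "CplDLk",
    (0b010, 0b01100): "FetchAdd", (0b011, 0b01100): "FetchAdd",
    (0b010, 0b01101): "Swap",     (0b011, 0b01101): "Swap",
    (0b010, 0b01110): "CAS",      (0b011, 0b01110): "CAS",
}


def tlp_type_name(fmt, tlp_type):
    """Return the Table 2-3 mnemonic, or None for a reserved encoding."""
    if fmt == 0b100:
        # TLP Prefix: Type bit 4 selects End-End (1) or Local (0).
        return "EPrfx" if tlp_type & 0b10000 else "LPrfx"
    if (tlp_type >> 3) == 0b10:
        # Type = 1 0rrr: Message Request, r[2:0] is the routing sub-field.
        if fmt == 0b001:
            return "Msg"
        if fmt == 0b011:
            return "MsgD"
        return None
    return _FIXED_ENCODINGS.get((fmt, tlp_type))
```

For example, `tlp_type_name(0b010, 0b00000)` yields "MWr", while a combination not listed in the table, such as Fmt 111b, yields None.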

Table 2-4: Length[9:0] Field Encoding

Length[9:0]      Corresponding TLP Data Payload Size
00 0000 0001b    1 DW
00 0000 0010b    2 DW
...              ...
11 1111 1111b    1023 DW
00 0000 0000b    1024 DW
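Since the Fmt, TD, and Length fields determine the size of the non-prefix portion of a TLP, the calculation can be sketched as follows. This is an illustration under stated assumptions: the function names are hypothetical, the Fmt interpretation follows Table 2-2, and the Length decoding follows Table 2-4 (a field value of zero means 1024 DW).

```python
def payload_dw(length_field):
    """Decode the 10-bit Length field into a payload size in DW."""
    if not 0 <= length_field <= 0x3FF:
        raise ValueError("Length is a 10-bit field")
    return length_field or 1024  # 00 0000 0000b encodes 1024 DW


def tlp_size_bytes(fmt, td, length_field):
    """Size in bytes of header + data payload + optional digest."""
    if fmt not in (0b000, 0b001, 0b010, 0b011):
        raise ValueError("Fmt does not describe a TLP header")
    header_dw = 4 if fmt & 0b001 else 3
    # Only the "with data" formats carry a payload; for other TLPs the
    # Length field does not describe data inside this packet.
    data_dw = payload_dw(length_field) if fmt & 0b010 else 0
    digest_dw = 1 if td else 0
    return 4 * (header_dw + data_dw + digest_dw)


# Hypothetical usage:
small_write = tlp_size_bytes(0b010, 0, 1)   # 3 DW header + 1 DW data = 16 bytes
large_write = tlp_size_bytes(0b011, 1, 0)   # 4 DW header + 1024 DW data + digest = 4116 bytes
read_request = tlp_size_bytes(0b000, 0, 32) # 3 DW header only = 12 bytes
```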


Wednesday, April 18, 2012

Transaction Layer Protocol - Packet Definition

PCI Express uses a packet based protocol to exchange information between the Transaction Layers

of the two components communicating with each other over the Link. PCI Express supports the

following basic transaction types: Memory, I/O, Configuration, and Messages. Two addressing

formats for Memory Requests are supported: 32 bit and 64 bit.

Transactions are carried using Requests and Completions. Completions are used only where

required, for example, to return read data, or to acknowledge Completion of I/O and Configuration

Write Transactions. Completions are associated with their corresponding Requests by the value in

the Transaction ID field of the Packet header.

All TLP fields marked Reserved (sometimes abbreviated as R) must be filled with all 0’s when a TLP is formed. Values in such fields must be ignored by Receivers and forwarded unmodified by

Switches. Note that for certain fields there are both specified and reserved values – the handling of

reserved values in these cases is specified separately for each case.


Monday, April 16, 2012

Fiber optic preform

A preform is a piece of glass used to draw an optical fiber. The preform may consist of several pieces of glass with different refractive indices, to provide the core and cladding of the fiber. The shape of the preform may be circular, although for some applications such as double-clad fibers another form is preferred.[61] In fiber lasers based on double-clad fiber, an asymmetric shape improves the filling factor for laser pumping.

Because of surface tension, the shape is smoothed during the drawing process, and the resulting fiber does not reproduce the sharp edges of the preform. Nevertheless, careful polishing of the preform is important, because any defects of the preform surface affect the optical and mechanical properties of the resulting fiber. In particular, the preform for the test fiber shown in the figure was not polished well, and the cracks are visible with a confocal optical microscope.


Other uses of optical fibers

Light reflected from optical fiber illuminates exhibited model

Fibers are widely used in illumination applications. They are used as light guides in medical and other applications where bright light needs to be shone on a target without a clear line-of-sight path. In some buildings, optical fibers route sunlight from the roof to other parts of the building (see nonimaging optics). Optical fiber illumination is also used for decorative applications, including signs, art, toys and artificial Christmas trees. Swarovski boutiques use optical fibers to illuminate their crystal showcases from many different angles while only employing one light source. Optical fiber is an intrinsic part of the light-transmitting concrete building product, LiTraCon.

Optical fiber is also used in imaging optics. A coherent bundle of fibers is used, sometimes along with lenses, for a long, thin imaging device called an endoscope, which is used to view objects through a small hole. Medical endoscopes are used for minimally invasive exploratory or surgical procedures. Industrial endoscopes (see fiberscope or borescope) are used for inspecting anything hard to reach, such as jet engine interiors. Many microscopes use fiber-optic light sources to provide intense illumination of samples being studied.

In spectroscopy, optical fiber bundles transmit light from a spectrometer to a substance that cannot be placed inside the spectrometer itself, in order to analyze its composition. A spectrometer analyzes substances by bouncing light off of and through them. By using fibers, a spectrometer can be used to study objects remotely.[31][32][33]

An optical fiber doped with certain rare earth elements such as erbium can be used as the gain medium of a laser or optical amplifier. Rare-earth doped optical fibers can be used to provide signal amplification by splicing a short section of doped fiber into a regular (undoped) optical fiber line. The doped fiber is optically pumped with a second laser wavelength that is coupled into the line in addition to the signal wave. Both wavelengths of light are transmitted through the doped fiber, which transfers energy from the second pump wavelength to the signal wave. The process that causes the amplification is stimulated emission.

Optical fibers doped with a wavelength shifter collect scintillation light in physics experiments.

Optical fiber can be used to supply a low level of power (around one watt) to electronics situated in a difficult electrical environment. Examples of this are electronics in high-powered antenna elements and measurement devices used in high voltage transmission equipment.

The iron sights for handguns, rifles, and shotguns may use short pieces of optical fiber for contrast enhancement.


Fiber optic sensors


Fibers have many uses in remote sensing. In some applications, the sensor is itself an optical fiber. In other cases, fiber is used to connect a non-fiberoptic sensor to a measurement system. Depending on the application, fiber may be used because of its small size, or the fact that no electrical power is needed at the remote location, or because many sensors can be multiplexed along the length of a fiber by using different wavelengths of light for each sensor, or by sensing the time delay as light passes along the fiber through each sensor. Time delay can be determined using a device such as an optical time-domain reflectometer.

Optical fibers can be used as sensors to measure strain, temperature, pressure and other quantities by modifying a fiber so that the property to measure modulates the intensity, phase, polarization, wavelength, or transit time of light in the fiber. Sensors that vary the intensity of light are the simplest, since only a simple source and detector are required. A particularly useful feature of such fiber optic sensors is that they can, if required, provide distributed sensing over distances of up to one meter.

Extrinsic fiber optic sensors use an optical fiber cable, normally a multi-mode one, to transmit modulated light from either a non-fiber optical sensor or an electronic sensor connected to an optical transmitter. A major benefit of extrinsic sensors is their ability to reach otherwise inaccessible places. An example is the measurement of temperature inside aircraft jet engines by using a fiber to transmit radiation into a radiation pyrometer outside the engine. Extrinsic sensors can be used in the same way to measure the internal temperature of electrical transformers, where the extreme electromagnetic fields present make other measurement techniques impossible. Extrinsic sensors measure vibration, rotation, displacement, velocity, acceleration, torque, and twisting. A solid state version of the gyroscope, using the interference of light, has been developed. The fiber optic gyroscope (FOG) has no moving parts, and exploits the Sagnac effect to detect mechanical rotation.

Common uses for fiber optic sensors include advanced intrusion detection security systems. Light is transmitted along a fiber optic sensor cable placed on a fence, pipeline, or communication cabling, and the returned signal is monitored and analysed for disturbances. This return signal is digitally processed to detect disturbances and trip an alarm if an intrusion has occurred.


Fiber optic Application

Optical fiber communication


Optical fiber can be used as a medium for telecommunication and computer networking because it is flexible and can be bundled as cables. It is especially advantageous for long-distance communications, because light propagates through the fiber with little attenuation compared to electrical cables. This allows long distances to be spanned with few repeaters. Additionally, the per-channel light signals propagating in the fiber have been modulated at rates as high as 111 gigabits per second by NTT,[23][24] although 10 or 40 Gbit/s is typical in deployed systems.[25][26] Each fiber can carry many independent channels, each using a different wavelength of light (wavelength-division multiplexing (WDM)). The net data rate (data rate without overhead bytes) per fiber is the per-channel data rate reduced by the FEC overhead, multiplied by the number of channels (usually up to eighty in commercial dense WDM systems as of 2008). The current laboratory fiber optic data rate record, held by Bell Labs in Villarceaux, France, is multiplexing 155 channels, each carrying 100 Gbit/s over a 7000 km fiber.[27] Nippon Telegraph and Telephone Corporation has also managed 69.1 Tbit/s over a single 240 km fiber (multiplexing 432 channels, equating to 171 Gbit/s per channel).[28] Bell Labs also broke a 100 Petabit per second kilometer barrier (15.5 Tbit/s over a single 7000 km fiber).[29]
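The arithmetic behind the Bell Labs figures quoted above can be reproduced with a short calculation. This is only a worked example using the numbers in the paragraph; the function names are hypothetical, and FEC overhead is not modelled because the text gives no value for it.

```python
def aggregate_rate_tbit_s(channels, per_channel_gbit_s):
    """Total rate over all wavelength channels, in Tbit/s."""
    return channels * per_channel_gbit_s / 1000.0


def capacity_distance_pbit_km_s(rate_tbit_s, distance_km):
    """Capacity-distance product, in Pbit/s times km."""
    return rate_tbit_s * distance_km / 1000.0


# 155 channels at 100 Gbit/s each over 7000 km of fiber.
bell_labs_rate = aggregate_rate_tbit_s(155, 100)                       # 15.5 Tbit/s
bell_labs_product = capacity_distance_pbit_km_s(bell_labs_rate, 7000)  # 108.5 Pbit/s*km
```

The product of about 108.5 Pbit/s·km is what places this result above the 100 petabit per second kilometer mark mentioned in the text.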

For short distance applications, such as a network in an office building, fiber-optic cabling can save space in cable ducts. This is because a single fiber can carry much more data than electrical cables such as standard category 5 Ethernet cabling, which typically runs at 1 Gbit/s. Fiber is also immune to electrical interference; there is no cross-talk between signals in different cables, and no pickup of environmental noise. Non-armored fiber cables do not conduct electricity, which makes fiber a good solution for protecting communications equipment in high voltage environments, such as power generation facilities, or metal communication structures prone to lightning strikes. They can also be used in environments where explosive fumes are present, without danger of ignition. Wiretapping (in this case, fiber tapping) is more difficult compared to electrical connections, and there are concentric dual core fibers that are said to be tap-proof.


Optical fiber history

Fiber optics, though used extensively in the modern world, is a fairly simple, and relatively old, technology. Guiding of light by refraction, the principle that makes fiber optics possible, was first demonstrated by Daniel Colladon and Jacques Babinet in Paris in the early 1840s. John Tyndall included a demonstration of it in his public lectures in London, 12 years later.[3] Tyndall also wrote about the property of total internal reflection in an introductory book about the nature of light in 1870: "When the light passes from air into water, the refracted ray is bent towards the perpendicular... When the ray passes from water to air it is bent from the perpendicular... If the angle which the ray in water encloses with the perpendicular to the surface be greater than 48 degrees, the ray will not quit the water at all: it will be totally reflected at the surface.... The angle which marks the limit where total reflection begins is called the limiting angle of the medium. For water this angle is 48°27', for flint glass it is 38°41', while for diamond it is 23°42'."[4][5] Unpigmented human hairs have also been shown to act as an optical fiber.
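
Tyndall's limiting angles can be related to refractive index through Snell's law: for light leaving a medium of index n into air, sin(θc) = 1/n. The sketch below turns the quoted angles back into the index they imply. The helper names are hypothetical, and air is assumed to have an index of exactly 1.

```python
import math


def dms_to_degrees(degrees, minutes):
    """Convert an angle given in degrees and arc minutes to decimal degrees."""
    return degrees + minutes / 60.0


def implied_index(limiting_angle_deg):
    """Refractive index implied by a limiting (critical) angle against air.

    Assumption: the outer medium is air with index 1, so sin(theta_c) = 1 / n.
    """
    return 1.0 / math.sin(math.radians(limiting_angle_deg))


if __name__ == "__main__":
    # Limiting angles as quoted by Tyndall.
    quoted = {"water": (48, 27), "flint glass": (38, 41), "diamond": (23, 42)}
    for medium, (deg, minutes) in quoted.items():
        n = implied_index(dms_to_degrees(deg, minutes))
        print(f"{medium}: n = {n:.3f}")
```

The implied indices come out at roughly 1.34 for water, 1.60 for flint glass, and 2.49 for diamond.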

Sunday, April 15, 2012

Packet Format Overview

Transactions consist of Requests and Completions, which are communicated using packets. Figure 2-2 shows a high level serialized view of a Transaction Layer Packet (TLP), consisting of one or more optional TLP Prefixes, a TLP header, a data payload (for some types of packets), and an optional TLP digest. Figure 2-3 shows a more detailed view of the TLP. The following sections of this chapter define the detailed structure of the packet headers and digest.

Serial View of a TLP

PCI Express conceptually transfers information as a serialized stream of bytes as shown in Figure 2-2. Note that at the byte level, information is transmitted/received over the interconnect with the leftmost byte of the TLP as shown in Figure 2-2 being transmitted/received first (byte 0 if one or more optional TLP Prefixes are present else byte H). Refer to Section 4.2 for details on how individual bytes of the packet are encoded and transmitted over the physical media.

Detailed layouts of the TLP Prefix, TLP Header and TLP Digest (presented in generic form in Figure 2-3) are drawn with the lower numbered bytes on the left rather than on the right as has traditionally been depicted in other PCI specifications. The header layout is optimized for performance on a serialized interconnect, driven by the requirement that the most time critical information be transferred first. For example, within the TLP header, the most significant byte of the address field is transferred first so that it may be used for early address decode.
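
As a small illustration of "most significant byte first", the sketch below lists the bytes of an address in the order they would go out. It is a simplification: the function name and the sample address are made up, and it ignores the fact that the lowest bits of the header's address field are not used as plain address bits.

```python
def address_bytes_on_wire(address, width_bytes=4):
    """Return the address bytes in transmission order, most significant first."""
    return address.to_bytes(width_bytes, byteorder="big")


if __name__ == "__main__":
    addr = 0xFEDC1000  # hypothetical 32-bit memory address
    # The upper byte (0xFE) is listed first, so a receiver could begin
    # decoding the address before the rest of it has arrived.
    print(address_bytes_on_wire(addr).hex(" "))
```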

Generic TLP Format

Payload data within a TLP is depicted with the lowest addressed byte (byte J in Figure 2-3) shown to the upper left. Detailed layouts depicting data structure organization (such as the Configuration Space depictions in Chapter 7) retain the traditional PCI byte layout with the lowest addressed byte shown on the right. Regardless of depiction, all bytes are conceptually transmitted over the Link in increasing byte number order.

Depending on the type of a packet, the header for that packet will include some of the following types of fields:

- Format of the packet

- Type of the packet

- Length for any associated data

- Transaction Descriptor, including:

  - Transaction ID

  - Attributes

  - Traffic Class

- Address/routing information

- Byte Enables

- Message encoding

- Completion status
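
To make a few of these fields concrete, here is a sketch that packs Format, Type, Traffic Class, and Length into the first four header bytes. The bit positions are my reading of the Rev. 2.1 header layout, not something stated in this excerpt, so treat them as assumptions and check them against the specification. The class name is hypothetical, and all other bits in these bytes are simply left at zero.

```python
from dataclasses import dataclass


@dataclass
class TlpDw0:
    fmt: int            # Format of the packet (assumed 3 bits, byte 0 bits 7:5)
    tlp_type: int       # Type of the packet (assumed 5 bits, byte 0 bits 4:0)
    traffic_class: int  # Traffic Class (assumed 3 bits, byte 1 bits 6:4)
    length_dw: int      # Length in DWs (assumed 10 bits, bytes 2 and 3)

    def pack(self) -> bytes:
        """Pack the four fields into 4 bytes, byte 0 first."""
        fields = (
            ("fmt", self.fmt, 3),
            ("tlp_type", self.tlp_type, 5),
            ("traffic_class", self.traffic_class, 3),
            ("length_dw", self.length_dw, 10),
        )
        for name, value, bits in fields:
            if not 0 <= value < (1 << bits):
                raise ValueError(f"{name} does not fit in {bits} bits")
        byte0 = (self.fmt << 5) | self.tlp_type
        byte1 = self.traffic_class << 4
        byte2 = (self.length_dw >> 8) & 0x3  # upper two bits of Length
        byte3 = self.length_dw & 0xFF        # lower eight bits of Length
        return bytes([byte0, byte1, byte2, byte3])


if __name__ == "__main__":
    # Hypothetical values; fmt=0b010 is assumed to mean "3 DW header, with data".
    dw0 = TlpDw0(fmt=0b010, tlp_type=0, traffic_class=0, length_dw=1)
    print(dw0.pack().hex(" "))
```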

Address Spaces, Transaction Types, and Usage

Transactions form the basis for information transfer between a Requester and Completer. Four address spaces are defined, and different Transaction types are defined, each with its own unique intended usage, as shown in Table 2-1.

Details about the rules associated with usage of these address formats and the associated TLP formats are described later in this chapter.

Transaction Types for Different Address Spaces

| Address Space | Transaction Types | Basic Usage |
| --- | --- | --- |
| Memory | Read, Write | Transfer data to/from a memory-mapped location |
| I/O | Read, Write | Transfer data to/from an I/O-mapped location |
| Configuration | Read, Write | Device Function configuration/setup |
| Message | Baseline (including Vendor-defined) | From event signaling mechanism to general purpose messaging |

Memory Transactions

Memory Transactions include the following types:

- Read Request/Completion

- Write Request

- AtomicOp Request/Completion

Memory Transactions use two different address formats:

- Short Address Format: 32-bit address

- Long Address Format: 64-bit address
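
A requester has to pick one of the two formats for each address. The sketch below assumes the short format is used whenever the address fits in 32 bits. This excerpt does not state that rule, so treat it as an assumption to confirm against the specification; the function name is hypothetical.

```python
def address_format(address):
    """Pick "short" (32-bit) or "long" (64-bit) for a memory address.

    Assumption: addresses that fit in 32 bits always use the short format.
    """
    if address < 0:
        raise ValueError("address must be non-negative")
    if address < (1 << 32):
        return "short"
    if address < (1 << 64):
        return "long"
    raise ValueError("address does not fit in 64 bits")


if __name__ == "__main__":
    for addr in (0x0000_1000, 0xFFFF_FFFF, 0x1_0000_0000):
        print(hex(addr), address_format(addr))
```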

I/O Transactions

PCI Express supports I/O Space for compatibility with legacy devices which require their use. Future revisions of this specification are expected to deprecate the use of I/O Space. I/O Transactions include the following types:

- Read Request/Completion

- Write Request/Completion

I/O Transactions use a single address format:

- Short Address Format: 32-bit address

Configuration Transactions

Configuration Transactions are used to access configuration registers of Functions within devices. Configuration Transactions include the following types:

- Read Request/Completion

- Write Request/Completion
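
One detail in the type lists above is easy to miss: a Memory Write is listed as a Request only, while every other Memory, I/O, and Configuration request is paired with a Completion. The lookup below restates that. The table and function names are made up for illustration, and Messages are left out because they are described separately.

```python
# True where the lists above pair the request with a Completion.
EXPECTS_COMPLETION = {
    ("Memory", "Read"): True,
    ("Memory", "Write"): False,  # listed as "Write Request" only
    ("Memory", "AtomicOp"): True,
    ("I/O", "Read"): True,
    ("I/O", "Write"): True,
    ("Configuration", "Read"): True,
    ("Configuration", "Write"): True,
}


def expects_completion(address_space, request):
    """Return True if the listed transaction type includes a Completion."""
    return EXPECTS_COMPLETION[(address_space, request)]


if __name__ == "__main__":
    for (space, request), completes in EXPECTS_COMPLETION.items():
        print(f"{space} {request}: completion expected = {completes}")
```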

Message Transactions

The Message Transactions, or simply Messages, are used to support in-band communication of events between devices. In addition to the specified Messages, PCI Express provides support for vendor-defined Messages using specified Message codes. The definition of specific vendor-defined Messages is outside the scope of this document.

This specification establishes a standard framework within which vendors can specify their own vendor-defined Messages tailored to fit the specific requirements of their platforms (see Sections 2.2.8.5 and 2.2.8.7).

Note that these vendor-defined Messages are not guaranteed to be interoperable with components from different vendors.

Transaction Layer Overview

Layering Diagram Highlighting the Transaction Layer

At a high level, the key aspects of the Transaction Layer are:

- A pipelined full split-transaction protocol

- Mechanisms for differentiating the ordering and processing requirements of Transaction Layer Packets (TLPs)

- Credit-based flow control

- Optional support for data poisoning and end-to-end data integrity detection.
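
The general idea behind credit-based flow control can be sketched as follows: the sender only transmits while it holds credits advertised by the receiver, and the receiver hands credits back as it frees buffer space. This is a generic illustration with a single credit pool and made-up names. The real protocol keeps several separate credit types, which this sketch ignores.

```python
class CreditGate:
    """Sender-side view of a single, simplified credit pool."""

    def __init__(self, initial_credits):
        self.credits = initial_credits  # credits advertised by the receiver

    def try_send(self, cost=1):
        """Consume credits and return True if the packet may be sent now."""
        if cost > self.credits:
            return False  # not enough credits: the sender must wait
        self.credits -= cost
        return True

    def return_credits(self, amount):
        """Receiver freed buffer space and returned this many credits."""
        self.credits += amount


if __name__ == "__main__":
    gate = CreditGate(initial_credits=2)
    print([gate.try_send() for _ in range(3)])  # third attempt has to wait
    gate.return_credits(1)
    print(gate.try_send())
```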

The Transaction Layer comprehends the following:

- TLP construction and processing

- Association of transaction-level mechanisms with device resources including:

  • Flow Control

  • Virtual Channel management

- Rules for ordering and management of TLPs

  • PCI/PCI-X compatible ordering

  • Including Traffic Class differentiation

This chapter specifies the behaviors associated with the Transaction Layer.

Inter-Layer Interfaces

Transaction/Data Link Interface

The Transaction to Data Link interface provides:

- Byte or multi-byte data to be sent across the Link

  • Local TLP-transfer handshake mechanism

  • TLP boundary information

- Requested power state for the Link

PCI EXPRESS BASE SPECIFICATION, REV. 2.1

The Data Link to Transaction interface provides:

- Byte or multi-byte data received from the PCI Express Link

- TLP framing information for the received byte

- Actual power state for the Link

- Link status information

Data Link/Physical Interface

The Data Link to Physical interface provides:

- Byte or multi-byte wide data to be sent across the Link

  • Data transfer handshake mechanism

  • TLP and DLLP boundary information for bytes

- Requested power state for the Link

The Physical to Data Link interface provides:

- Byte or multi-byte wide data received from the PCI Express Link

- TLP and DLLP framing information for data

- Indication of errors detected by the Physical Layer

- Actual power state for the Link

- Connection status information

Physical Layer Services

Interface initialization, maintenance control, and status tracking:

- Reset/Hot-Plug control/status

- Interconnect power management

- Width and Lane mapping negotiation

- Polarity reversal

- 8b/10b encoding/decoding

- Embedded clock tuning and alignment

- Transmission circuits

- Reception circuits

- Elastic buffer at receiving side

- Multi-Lane de-skew (for widths > x1) at receiving side

Friday, April 13, 2012

Data Link Layer Services

The Data Link Layer is responsible for reliably exchanging information with its counterpart on the opposite side of the Link.

Initialization and power management services:

- Accept power state Requests from the Transaction Layer and convey to the Physical Layer

- Convey active/reset/disconnected/power managed state to the Transaction Layer

- CRC generation

- Transmitted TLP storage for Data Link level retry

- Error checking

- TLP acknowledgment and retry Messages

- Error indication for error reporting and logging
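
The retry-related services in this list (CRC generation, storage of transmitted TLPs, acknowledgment and retry) fit together roughly as in the sketch below. It is a generic illustration, not the mechanism defined by the specification: `zlib.crc32` stands in for the link CRC, sequence numbers never wrap, and the class and function names are hypothetical. A negative acknowledgment for sequence number n is assumed to mean "everything through n arrived, resend the rest".

```python
import zlib
from collections import OrderedDict


class ReplayBuffer:
    """Keeps transmitted frames until the other side acknowledges them."""

    def __init__(self):
        self.next_seq = 0
        self.unacked = OrderedDict()  # seq -> (seq, payload, crc)

    def transmit(self, payload):
        """Tag the payload with a sequence number and CRC, and store a copy."""
        frame = (self.next_seq, payload, zlib.crc32(payload))
        self.unacked[self.next_seq] = frame
        self.next_seq += 1
        return frame

    def ack(self, seq):
        """Cumulative acknowledgment: drop every stored frame up to seq."""
        for stored in [s for s in self.unacked if s <= seq]:
            del self.unacked[stored]

    def nak(self, seq):
        """Drop frames up to seq, then return the rest for retransmission."""
        self.ack(seq)
        return list(self.unacked.values())


def crc_ok(frame):
    """Receiver-side error check: recompute the CRC over the payload."""
    _seq, payload, crc = frame
    return zlib.crc32(payload) == crc


if __name__ == "__main__":
    buf = ReplayBuffer()
    frames = [buf.transmit(p) for p in (b"tlp-a", b"tlp-b", b"tlp-c")]
    buf.ack(0)
    print("to replay:", [seq for seq, _, _ in buf.nak(1)])
    print("crc ok:", crc_ok(frames[2]))
```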

Transaction Layer Services

The Transaction Layer, in the process of generating and receiving TLPs, exchanges Flow Control information with its complementary Transaction Layer on the other side of the Link. It is also responsible for supporting both software and hardware-initiated power management.